Why the need for Apogee?

At Breakpoint we are all about improving customers’ digital experiences. We understand the frustration of interrupted sessions and failed transactions, be it via mobile Internet or Unstructured Supplementary Service Data (USSD). But how do you gauge a user’s experience while accessing these applications? For example, how does one determine the success of a USSD shortcode that serves a mobile wallet to the end-user? Traditional monitoring only gives a view of the infrastructure, and even advanced application performance monitoring tools only give a view of the user sessions that made it to the servers. Because the service is not monitored from the user’s perspective, one cannot know what the true service state is. This is the problem that Apogee has been designed to address. Using remote monitoring appliances with unique capabilities, Apogee is able to monitor these ‘digital experiences’ via USSD, STK and other mobile channels.

How it works – in summary

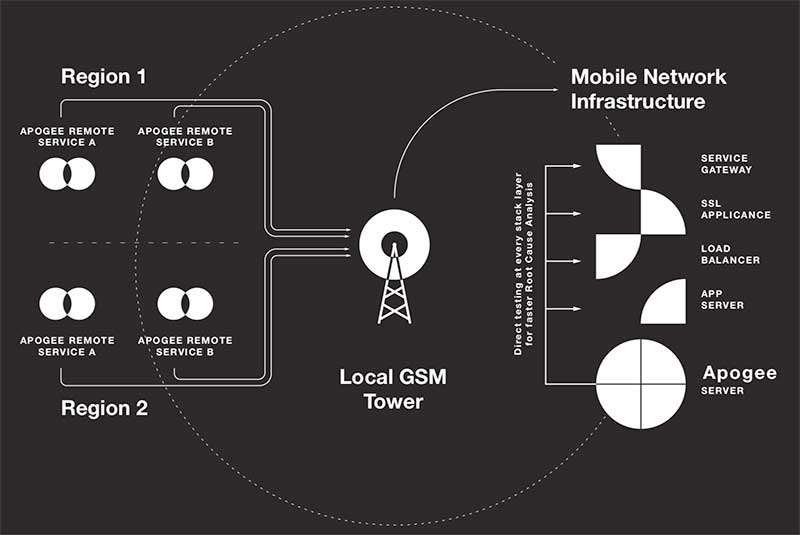

A software or hardware agent or, in the case of mobile channels, a GSM terminal is connected to a remotely deployed appliance, which is under central command and control. A series of scripted actions (synthetic transactions) is then executed against the target channel, mimicking a real user’s actions in a web, mobile, USSD-based or other channel application. The results of these actions, such as the responses received, the timing of each step, and any errors and timeouts experienced, are then recorded, packaged and submitted to a central server for storage, processing, analytics and visualisation.
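To make the flow above a little more concrete, here is a minimal Python sketch of a synthetic transaction runner. It is not Apogee's actual code: the names `run_transaction`, `package` and `fake_ussd_channel`, the JSON layout and the sample USSD menu are all assumptions made purely for illustration.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class StepResult:
    name: str
    response: Optional[str]
    elapsed_s: float
    error: Optional[str]


def run_transaction(steps: List[Tuple[str, str]],
                    send: Callable[[str], str]) -> List[StepResult]:
    """Run each scripted step through `send`, recording response, timing and errors."""
    results = []
    for name, payload in steps:
        started = time.monotonic()
        try:
            response, error = send(payload), None
        except Exception as exc:  # a timeout or channel error ends the script
            response, error = None, f"{type(exc).__name__}: {exc}"
        results.append(StepResult(name, response, time.monotonic() - started, error))
        if error is not None:
            break
    return results


def package(remote_id: str, results: List[StepResult]) -> str:
    """Bundle the step results into one JSON document for the central server."""
    return json.dumps({"remote": remote_id, "steps": [asdict(r) for r in results]})


def fake_ussd_channel(payload: str) -> str:
    """Hypothetical stand-in for a GSM terminal; a real remote would drive a modem."""
    replies = {"*123#": "1. Balance\n2. Send money", "1": "Balance: R100.00"}
    if payload not in replies:
        raise TimeoutError("no response from network")
    return replies[payload]


if __name__ == "__main__":
    script = [("dial shortcode", "*123#"), ("check balance", "1")]
    print(package("remote-01", run_transaction(script, fake_ussd_channel)))
```

Stopping at the first failed step is a design choice in this sketch: later steps in a USSD session usually depend on the earlier ones, so continuing would only record noise.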

Much research, testing and experimentation, along with several proofs of concept, were done before development of Apogee began. Here is a summary of the various phases of our research.

Testing feasibility

First, we needed to determine which of our anticipated options for Apogee Remotes were feasible. For example, what can we accomplish purely with a software agent that could be deployed anywhere in the cloud? (As it turned out, quite a lot!) We had to determine whether a GSM terminal would be able to send USSD shortcodes and get responses, connect to a mobile app endpoint over 3G or LTE, or even process asynchronous transaction flows. One use case even called for an xDSL interface with an extremely low power budget, for which we had a cool bit of POC hardware developed. Once this was all more or less sorted and we had prototype remotes built and coded up, we needed to get the timing and response-checking just right. This involved measuring the time it took to complete each step of a synthetic transaction sequence, calculating the total elapsed duration, checking the validity of the output parameters, and so on.
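As a rough illustration of the timing and response-checking described above, the Python sketch below sums per-step durations and validates each response. The `StepCheck` fields, the regular-expression check and the per-step time budget are assumptions for the example, not a description of how Apogee does it.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StepCheck:
    name: str
    elapsed_s: float   # measured duration of this step
    response: str      # text returned by the channel
    expect: str        # regular expression the response should match
    budget_s: float    # longest acceptable duration for this step


def evaluate(steps: List[StepCheck]) -> Dict[str, object]:
    """Return the total elapsed time and a list of validity or timing failures."""
    failures = []
    for step in steps:
        if re.search(step.expect, step.response) is None:
            failures.append(f"{step.name}: unexpected response")
        if step.elapsed_s > step.budget_s:
            failures.append(
                f"{step.name}: took {step.elapsed_s:.1f}s, budget {step.budget_s:.1f}s"
            )
    total_s = sum(step.elapsed_s for step in steps)
    return {"total_s": total_s, "ok": not failures, "failures": failures}


if __name__ == "__main__":
    report = evaluate([
        StepCheck("dial shortcode", 1.2, "1. Balance", r"Balance", 5.0),
        StepCheck("check balance", 6.5, "Balance: R100.00", r"R\d+\.\d{2}", 5.0),
    ])
    print(report)
```

Here the second step returns a valid response but exceeds its budget, so the transaction as a whole is flagged even though every step technically succeeded.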

Identifying the right mobile interfaces

When it became apparent that our own custom-developed mobile hardware solution would not be feasible due to strict regulatory rules around telecommunications equipment, we approached various local companies for a suitable option. After initially testing a product that lacked a few necessary features, we identified and purchased a South African-designed and manufactured unit from RF Design, the GT-100. It supported multiple African operator networks and, thanks to its u-blox LEON-G100 module, offered the missing features we required. We have since gone through a few generations of this module, with good results.

Topology

From the very beginning, we selected a client/server topology in which remotes with appropriate interfaces and mobile terminals are placed in various geographically or topologically disparate locations and send their data to a centralised server, which processes and displays the performance of each test case. This enables regional and context-aware service monitoring for a clearer view of how services are delivered to your user base in various parts of the country, over different carrier networks, or across combinations of these factors.

Message Queue

We investigated various queueing servers and found that Redis fitted the requirement perfectly: it has client libraries for Go and Python, its API was quick to pick up and start prototyping with, and it could handle a high number of clients. While communication between server and remote was not encrypted, this could be added using third-party libraries.
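For readers unfamiliar with using Redis as a queue, here is one common pattern sketched in Python: a remote pushes JSON results onto a list with `LPUSH` and the server pops them in arrival order with `BRPOP`. Whether Apogee uses Redis lists in this way is an assumption, as are the key name `apogee:results` and the function names. The `InMemoryClient` class is a stand-in that imitates only the two redis-py calls used here, so the sketch runs without a Redis server.

```python
import json
from collections import defaultdict, deque
from typing import Optional

QUEUE_KEY = "apogee:results"  # hypothetical key name


def submit_result(client, result: dict) -> None:
    """Remote side: push one result onto the head of the list."""
    client.lpush(QUEUE_KEY, json.dumps(result))


def next_result(client, timeout_s: int = 5) -> Optional[dict]:
    """Server side: pop the oldest result, or return None if none arrived in time."""
    item = client.brpop(QUEUE_KEY, timeout=timeout_s)
    if item is None:
        return None
    _key, raw = item
    return json.loads(raw)


class InMemoryClient:
    """Demo-only stand-in for a Redis client; unlike real BRPOP it never blocks."""

    def __init__(self) -> None:
        self._lists = defaultdict(deque)

    def lpush(self, name, *values):
        for value in values:
            data = value.encode() if isinstance(value, str) else value
            self._lists[name].appendleft(data)
        return len(self._lists[name])

    def brpop(self, keys, timeout=0):
        queue = self._lists[keys]
        if not queue:
            return None
        return (keys.encode(), queue.pop())


if __name__ == "__main__":
    client = InMemoryClient()
    submit_result(client, {"remote": "remote-01", "total_s": 2.4})
    print(next_result(client))
```

With a running Redis server, a `redis.Redis` instance from the redis-py package could be passed in place of the stand-in, since it provides `lpush` and `brpop` with the same call shapes.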

Remote device options

Remote appliance proofs of concept included reviewing the Intel NUC (x86-based) and the Raspberry Pi (ARM-based). Both provided enough processing power, but the Intel NUC edged ahead with more configuration options, more memory, SSD storage and physical security options. For purely mobile applications, a MikroTik RouterOS-driven configuration with two LTE channels provided a simple and robust option.

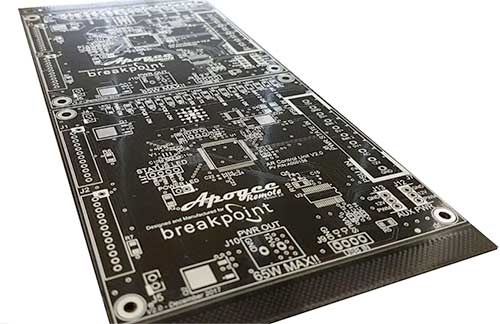

For larger-scale deployments, the need for a custom circuit became evident when our initial attempts at repurposing other testing hardware resulted in too many compromises and technical workarounds to remain feasible. So we approached our local hardware partner, who designed and built a custom circuit for hosting up to eight mobile channels in a 2U enclosure, complete with battery backup and a watchdog circuit for remote restarts of single channels, all the channels or even the entire appliance. The modular nature of the custom circuit board and hardware interfaces also provided the option to exchange the modem interfaces based on local regulations and approvals in various territories.

Software research

The next phases in our research concerned the programming technology, the choice of operating system, server programming options and, finally, our chosen database and user interface technologies, all of which will be covered in detail in an upcoming blog post.